It's almost a year now since I started using Standards Based Grading ideas in my teaching. Actually, it's almost a year since I started teaching! So how did SBG work out for this rookie teacher?

Hmm... it's a bit ambitious. There are so many things to come to terms with as a new teacher that adding SBG to the mix might be pushing it, especially if you are the first teacher in your faculty to try it out. I took the plunge, probably a little foolishly, because SBG seemed just so right for so many reasons. I did at least have the sense to try a full implementation with only one year group.

Preparing SBG resources (quizzes, grading sheets) and doing the extra marking will take more time, something in very short supply in your first year, but it will help you develop some critical teaching skills. Creating and using a list of standards will ensure you know the content, help you focus on what is important to teach, and provide clear direction for your lesson planning. Using quizzes on a regular basis will give you continuous feedback on how your teaching efforts are translating into actual, measured student learning. It's your first year, and you most definitely need that feedback: the sooner the better, to help develop good habits. As a new teacher, you are likely still learning the basics of giving and receiving student feedback, so SBG provides a scaffold for these essential teaching skills. Without SBG, you will likely not discover problems until the end-of-topic tests, which is too late to find out you were teaching something the wrong way.

Would I recommend SBG for new teachers in their first year? Cautiously, and only with support from your head teacher. Try it with only one class, carefully choosing a class that won't overly test your still-nascent classroom management skills or content knowledge. If you don't have head teacher support or a class you feel confident with, then perhaps consider what I'm calling SBG-Lite for the first year.

SBG-Lite

While faculty and legislative constraints prevented me from using a full SBG implementation with all but two of my classes, I found that even in classes where I was not allowed to use SBG for end-of-semester grades, I used many SBG tools while teaching each topic. Even if you can't use SBG data to determine official school grades, the information you gain about a student's learning, aligned to your standards, provides rich material for written comments in school reports and for conversations with students and their parents.

So what does SBG-Lite look like? You still have standards, you still have quizzes, and you still receive and give regular feedback on student mastery of the standards. The only real difference is that it doesn't directly translate into end-of-semester grades. It's up to you to convince students that their efforts to master the standards will ultimately translate into better grades, and that tracking standards and reattempting quizzes are worth the effort. It's a harder sell - but you believe it, don't you? While SBG-Lite most likely doesn't improve motivation and engagement to the same degree as a full SBG implementation (because students lack direct control over their marks), it remains a helpful addition to the learning environment.

Of course I would like to be able to use a full SBG implementation with more of my classes, but sometimes you just have to adapt to your current system, especially when you are the new kid on the block. Pushing too hard may only provoke a move to shut down SBG in the classes where you can use it.

SBG and the challenge of "Working Mathematically"

A serious problem in most mathematics assessment practices, and consequently in most mathematics teaching practices, is an overemphasis on developing procedural skills, often at the cost of understanding, reasoning and problem solving. There is a risk that using SBG amplifies this focus on skills fluency, reducing learning mathematics to a list of skills to be evaluated.

But it doesn't have to be that way; it depends on the standards you use. My solution was to label my standards with the "Working Mathematically" proficiencies (Understanding, Skills, Problem Solving and Reasoning). This helped me see the balance (or lack of it) in my learning outcomes, and it offered guidance on the different learning strategies that help students master each type of standard.

SBG: What worked ...

Can I categorically say SBG improved the results of my class? No. But I can say the class did well in their final results: most students mastered most of the standards, as shown in the end-of-year tests, and I do know we had a very positive class environment. So I stand by a 'did no harm' claim, while suggesting SBG may have led to better outcomes.

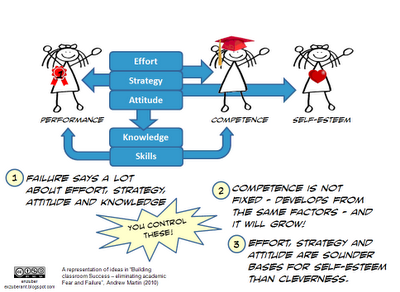

Students responded well to SBG; in their feedback they said they appreciated having more control over their grades. Many students, and even some parents, remarked at the end of the year on how student attitudes to mathematics had changed from fear and anxiety to feeling able to do the work, and even to enjoying it! SBG definitely helped me develop my teaching skills, providing an anchor in the maelstrom that is the first year of teaching.

... and what didn't work

Teaching in my first year was challenging, and that's an understatement. As the year progressed, I found it harder to be consistent in my SBG implementation. In the rush to complete my teaching program, SBG sometimes fell by the wayside. I'm hoping that with more experience this won't happen in 2012.

In the middle of the year, I tried using SBG with my youngest class, fresh from primary (elementary) school, but I couldn't get the class into a 'learning about learning' space and I had to give up. I'm not sure whether this was because SBG is too demanding for students of this age group or because I need more experience working with younger students; possibly the latter. I'll find out this year, because I'm trying SBG again with the same year level.

One of the central ideas of SBG, being able to reassess individual standards, started to slip. Most likely this was because I didn't promote the link between quizzes and the end-of-year mark sufficiently, so as the year progressed, students stopped retaking quizzes. One solution would have been to use a web-based tool like ActiveGrade. Unfortunately, several factors conspired against this, not least an inflexible attitude from my school district's network administrators, who deemed the application unsafe for schools (go figure).

Three ideas for 2012:

- SBG for the struggling, disengaged class: Like most mathematics faculties, we use so-called 'ability streaming' to allocate students to classes. As a consequence, we end up with entire classrooms of students with a well-established pattern of low mathematics achievement and high levels of disengagement, factors that typically get worse as the students progress through the school system. Previously I was concerned SBG would be too hard to manage with this type of class, but now I realise we have nothing to lose by trying something different. I'm going to try SBG-Lite with one of these classes (unfortunately, full SBG isn't an option due to the faculty grading policy for this year level).

- Provide better visibility of student standards achievement: At the close of each topic, I will provide each student and their parents with a view of their grade book, making it clear to them which quizzes they might wish to retake in order to improve their topic result.

- Leverage SBG through student summary books: I have designed workbooks with one or two standards per page and lots of blank space after each standard, where students can write their own summaries of what the standard means to them.

The verdict after the first year? SBG was a valuable and helpful tool - it's a core part of my teaching practice. I'm ramping it up for Year Two!